Iviglious

Programming code, ideas, presentations and features.

01/02/2020

06/01/2020

Azure Data Factory - Technical videos

Happy New Year 2020 people!

I proudly present you a part of my new project I started back in 2018 and never finished completely.

I decided to create technical demo videos of using new cloud tools by talking from a random location where I am at.

The first of the videos was about how to create a Microsoft Azure Data Factory, which currently is one of the newest cloud ETL tools.

The video is old, so I was using version 1 of ADF even that there was version 2 already appearing.

Don't look too much about the technicality, but more about the idea and quality of presentation.

Enjoy!

Azure tutorial by Iviglious

Video editing by Iviglious

Music composed by Iviglious

Location:

London, UK

22/02/2019

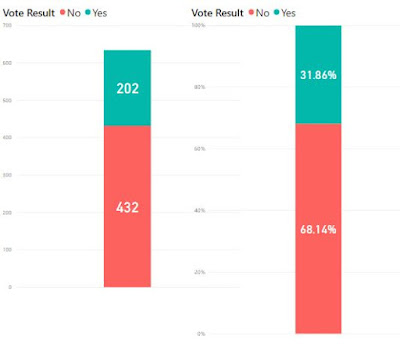

Who voted against Brexit Deal - Analysis with PowerBI

Results

|

| Fig. 1: Distribution of votes |

|

| Fig.2: Distribution by MP's gender |

|

| Fig. 3: Distribution of votes in UK |

|

| Fig. 4: Distribution of votes in London |

How I did it?

One of the recent and famous parliamentary events was the vote for acceptance of the Brexit contract proposed by Theresa May.

The contract was rejected undoubtfully with 432 No's agains 202 Yes'es.

However, I haven't heard any other statistical info about it.

So, I decided to check and see for myself what kind of MPs voted No and who voted Yes.

I decided to use Microsoft PowerBI and test it directly on JSON source.

As most of the big info sites, the UK Parliament site provides public access to their data thru an API: http://www.data.parliament.uk/

Exactly what I needed.

Members

First, I got a list of all MPs:

As they are about 4.5k members (some of which MPs), I had to do it in 5 parts.

By using parameters (_pageSize and _page) one can specify how much of a data to extract from the API.

I found that using limit of 1000 was most efficient.

I downloaded the 5 files and joined them into one big: members.json

Then I used this file straight as my first source.

Votes

Then there is an API which shows you all Parliamentary sessions in the House of Commons.

Looking by date, I found the ID of the session I was interested: "European Union (Withdrawal) Act main Motion (Prime Minister)" with ID: 1041567

As there were about 600 votes, I was able to extract them all with one call to the API:

I saved the JSON and used it as the second source in PowerBI.

Votes details (View)

Now, we need to join these two sources.

To makes things secure I did a left join.

I want all rows from Votes and only those rows which match from Members.

In practice, all Votes should have a row in Members, but just in case there aren't I use this type of join.

It took me a bit of time, but with some websearch I managed to write a left join formula, which returns me only the fields I'm interested into for the moment:

Both data sets contain the ID number of the member.

In Votes, there is a field containing the result of the vote (Yes, No) and the Member ID.

In Members source, there is field containing the Member ID, Name of consituency (Geo Area) and Gender.

I used this view to create the Map and Gender distribution of votes.

Using JSON as a source in PowerBI is easy, however takes time to get used to where to click and what to do.

I'll not go into details how to do it here.

Essentially, it is expansion of fields and use of simple string function to format the values.

In a nice way, PowerBI saves all your transformations in a script and essentially executes them every time the data sources are refreshed.

Labels:

Analysis,

API,

Brexit,

JSON,

Microsoft BI,

MP,

Parliament,

PowerBI,

Reports,

Vote

11/04/2016

Asus x450ca - screen replacement

This is my first video tutorial explaining how to replace broken screen of a laptop.

I did it by myself (just watching some other tutorials). I also composed the "elevator" music at the background and edited the video using Movie Maker.

I hope you like it and will be of good use!

New screen bought from: http://www.laptopscreen.com/

Music: AGeDaCi Smooth

Composed by: Iviglious (using GarageBand)

Video created by: MS Movie Maker

I did it by myself (just watching some other tutorials). I also composed the "elevator" music at the background and edited the video using Movie Maker.

I hope you like it and will be of good use!

New screen bought from: http://www.laptopscreen.com/

Music: AGeDaCi Smooth

Composed by: Iviglious (using GarageBand)

Video created by: MS Movie Maker

Location:

London, UK

18/06/2014

From Web to Web - MS BI demo

What are the Microsoft Business Intelligence tools (stack) and what are they used for?

Here I give you one easy and understandable demonstration of the SSIS, SSAS and SSRS tools using MS SQL Database and some sample data from a weather website.

Let's say that you want to do your own report and graphics of the temperatures of London.

What do you need? A machine (I am using a small VM), a database, where to store the data (in my case I choose MS SQL Database), the MS BI tools (SSIS, SSAS, SSRS) and some time :).

The idea is the following:

1. There is data of the temperature in a website. For every day we have the highest temperature and the lowest and some more data like humidity, pressure, etc.

2. The website allows us to download this data for specific period (in my case the month of January 2014) into a CSV file. Each line in the file is a day with it's data. The file has 31 lines.

3. Using the SSIS (Microsoft Visual Studio) we create a SSIS projects and create a package, which i Extracting the data from the file, Transforming it and Loading it into the database (ETL).

4. Using the SSAS (Microsoft Visual Studio) we create a SSAS project with a cube, dimensions and measures based on the data loaded into the database. We need to configure the SSAS server and deploy the SSAS project into it in order to be accessible by the SSRS.

5. Using the SSRS (Microsoft Visual Studio) we create a SSRS project with a report containing graphic and table with the temperature data based on the cube from the SSAS project.

6. Configuring the SSRS local server and publishing/deploying the report into it will allow us to visualize the report directly into our web browser. In this way the cycle is closed (from Web to Web).

So...MS BI is a set of tools allowing you to Extract, Test and Load data and then perform Analysis and at the end generate Reports of those analysis.

Somebody will say: "Why do you need all of this when it's possible to show the results directly from the web site using some scripting?". For the current example this is true, you don't need to have all of this, but for a complex system which has 10 or more different datasources (web sites, files, etc.) and If you want to create a lot of different reports, then you need this.

The cool things about the MS BI stack are:

- There are 3 separate parts (ETL, Analysis, Reports). Splitting the work.

- It is able to work with different databases (at the same time).

- The design is very visual, so the developing and debugging are easier.

- Migration is easy, everything is save as separate projects.

This is BI, it's the future and I like it :)

Here I give you one easy and understandable demonstration of the SSIS, SSAS and SSRS tools using MS SQL Database and some sample data from a weather website.

What do you need? A machine (I am using a small VM), a database, where to store the data (in my case I choose MS SQL Database), the MS BI tools (SSIS, SSAS, SSRS) and some time :).

The idea is the following:

1. There is data of the temperature in a website. For every day we have the highest temperature and the lowest and some more data like humidity, pressure, etc.

2. The website allows us to download this data for specific period (in my case the month of January 2014) into a CSV file. Each line in the file is a day with it's data. The file has 31 lines.

3. Using the SSIS (Microsoft Visual Studio) we create a SSIS projects and create a package, which i Extracting the data from the file, Transforming it and Loading it into the database (ETL).

4. Using the SSAS (Microsoft Visual Studio) we create a SSAS project with a cube, dimensions and measures based on the data loaded into the database. We need to configure the SSAS server and deploy the SSAS project into it in order to be accessible by the SSRS.

5. Using the SSRS (Microsoft Visual Studio) we create a SSRS project with a report containing graphic and table with the temperature data based on the cube from the SSAS project.

6. Configuring the SSRS local server and publishing/deploying the report into it will allow us to visualize the report directly into our web browser. In this way the cycle is closed (from Web to Web).

So...MS BI is a set of tools allowing you to Extract, Test and Load data and then perform Analysis and at the end generate Reports of those analysis.

Somebody will say: "Why do you need all of this when it's possible to show the results directly from the web site using some scripting?". For the current example this is true, you don't need to have all of this, but for a complex system which has 10 or more different datasources (web sites, files, etc.) and If you want to create a lot of different reports, then you need this.

The cool things about the MS BI stack are:

- There are 3 separate parts (ETL, Analysis, Reports). Splitting the work.

- It is able to work with different databases (at the same time).

- The design is very visual, so the developing and debugging are easier.

- Migration is easy, everything is save as separate projects.

This is BI, it's the future and I like it :)

Labels:

Analysis Services,

CSV,

Cubes,

ETL,

Microsoft BI,

Reports,

SQL Database,

SSAS,

SSIS,

SSRS

Location:

Londres, Reino Unido

01/03/2014

WIVOS - Web based Inteactive Visual Operating System

Click here to open - WIVOS

Using HTML, JavaScript and simple CSS I created this demo web page showing, how powerfull JavaScript can be.

It has a Windows Desktop looking design.

There is a task bar, start button and 4 sample icons:

You can move and place the icons all over the desktop and when double clicked, a window is opened:

The windows can be minimized, maximized, restored and closed.

There are some defects and is runs not so smoothly, but still demostrates the power of JavaScript.

Using HTML, JavaScript and simple CSS I created this demo web page showing, how powerfull JavaScript can be.

It has a Windows Desktop looking design.

There is a task bar, start button and 4 sample icons:

You can move and place the icons all over the desktop and when double clicked, a window is opened:

The windows can be minimized, maximized, restored and closed.

There are some defects and is runs not so smoothly, but still demostrates the power of JavaScript.

02/02/2014

SSIS - How to process SSAS cubes

In this post I will show how to create, configure and monitor a processment of cubes using Microsoft SSIS package.

I'll start from the beginnig:

1. Create a new SSIS project:

Automatically a SSIS package is created with a name "Package.dtsx". If you want you can rename it.

2. Create Data Sources. You need to create the data source of the cubes (where are located your cube/s). And also my recomendation is to create the data source of the log database - MS SQL Server Database. In this database will be stored a log of all actions that the SSIS package will perform. This is one way to monitor the SSIS package activities and also keep history and even create statistics.

2a. Create SSAS Cubes Data Source:

You can check this check box in order next time to not appear this kind of "warning" message.

2b. Create SQL Server Database Data Source

In the same way like the creation of the Cube Data Source, just using the SQL Server Database connector and connecting to a SQL Server database.

3. Creation of Analysis Services Processing Task:

We will use just this type of task to process cubes and/or dimensions.

4. Configuring the Dimensions processment

4a. You can rename the Task:

4b. Add connection to the Cubes SSAS database:

4c. Add the cube first:

4d. Add all related dimensions to this cube:

4e. Remove the cube (leave only the dimensions):

5. Add another Analysis Processment task (you can copy paste the previous) and configured it in the same way like the dimensions, but this time just leave the cube (steps 4b - 4c).

6. Connect the 2 tasks. When the first task is completed successfully the execution flow will execute the second task:

7. Test the package (execute it):

Done.

I'll start from the beginnig:

1. Create a new SSIS project:

Automatically a SSIS package is created with a name "Package.dtsx". If you want you can rename it.

2. Create Data Sources. You need to create the data source of the cubes (where are located your cube/s). And also my recomendation is to create the data source of the log database - MS SQL Server Database. In this database will be stored a log of all actions that the SSIS package will perform. This is one way to monitor the SSIS package activities and also keep history and even create statistics.

2a. Create SSAS Cubes Data Source:

You can check this check box in order next time to not appear this kind of "warning" message.

In the same way like the creation of the Cube Data Source, just using the SQL Server Database connector and connecting to a SQL Server database.

3. Creation of Analysis Services Processing Task:

We will use just this type of task to process cubes and/or dimensions.

4. Configuring the Dimensions processment

4a. You can rename the Task:

4b. Add connection to the Cubes SSAS database:

4c. Add the cube first:

4d. Add all related dimensions to this cube:

4e. Remove the cube (leave only the dimensions):

5. Add another Analysis Processment task (you can copy paste the previous) and configured it in the same way like the dimensions, but this time just leave the cube (steps 4b - 4c).

6. Connect the 2 tasks. When the first task is completed successfully the execution flow will execute the second task:

7. Test the package (execute it):

Done.

Labels:

Analysis Services,

Cubes,

SSAS,

SSIS,

Task

Location:

Сао Пауло, Бразилия

Subscribe to:

Posts (Atom)